Canadian Linked Data Summit: A Year After

It has been a year to the day since the Canadian Linked Data Summit took place in Montreal in 2016. The event was meant to push the deployment of Linked Open Data approaches in Canadian institutions, which were perceived to be less advanced than their European and American counterparts. The presentation by MJ Suhonos, Linked Open Data in Canada: Behind the Curve [1], caught my eye with its candid review of the situation in Canada.

Have things changed in Canada a year later? The webpage "Linked Data Projects in Canada" compiles a listing of projects in Canada along with an evaluation grid. It shows a picture that has not improved significantly since the summit, which was itself meant to be a follow-up to LODLAM 2015!

Why Isn't Linked Open Data Getting Any Traction?

Given the power of Linked Open Data and the possibility of externalizing communications costs, people should be adopting this technology wholesale. So what is holding it back? Some of the issues observed so far:

Selling Linked Open Data To The Wrong Audience

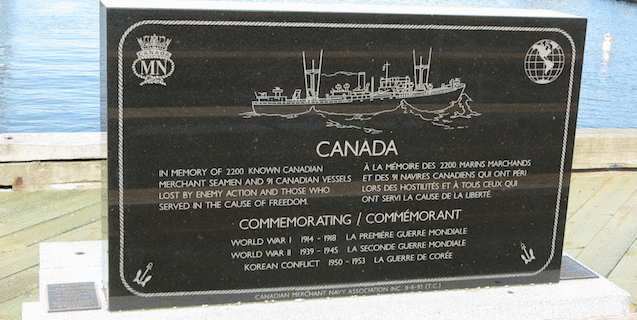

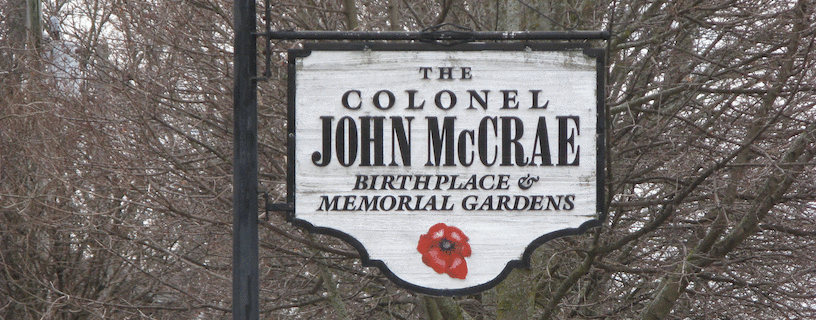

Linked Open Data is primarily about engaging with people through their software. End users deal with the end application, and as such they have no basis for wanting [Linked] Open Data. An anecdotal example of this audience mismatch can be found in the comments on the Soldiers of the First World War dataset located on the Open Government Portal: "The average computer user could not possibly find anything in this totally useless data base. Please restore the original search base which was user friendly" (footnote 1). The application that end users actually want exists separately (and to the portal's credit, it is clearly listed at the top of the page), but the overloaded vernacular use of the "data-" words does not let end users make the distinction: "this section should be regarding the 'dataset' for the Soldiers of the First World War. For comments about the 'database' ... Yeah right, everyone understands that for sure. I think the same folks designed this as designed the Phoenix software" (footnote 2).

The tension between easy-to-use applications and sophisticated data sources can similarly be seen at the ODI, which has been pushing Comma-Separated Values (CSV) as a file format for data exchange for the past few years. This has been well received because anyone can consume a CSV file using Excel, make a bar chart and demonstrate a simple-to-understand (and probably wrong; see footnote 3) answer. Anyone who has had to create a detailed analysis across more than one data set realizes how quickly CSVs become unmanageable, especially when trying to repeat the analysis or establish the data methodology. To this end, the ODI has also been promoting the use of JSON schemas to document CSV headings, which strikes one as having all of the problems, and none of the benefits, of the semantic web stack.
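To make the multi-file problem concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the two CSV extracts, their made-up numbers, the helper names and the `example.org` identifiers are hypothetical and do not come from any real dataset or vocabulary. It only shows that two files can share a heading while meaning different things, and that the difference becomes detectable once each heading is tied to an identifier.

```python
import csv
import io

# Two hypothetical CSV extracts with made-up numbers.
# Both use the bare heading "cpi", but they measure different things.
EXTRACT_A = "year,cpi\n2015,100.0\n2016,101.5\n"
EXTRACT_B = "year,cpi\n2015,100.0\n2016,100.9\n"

# Hypothetical identifiers for each heading; the example.org URIs are
# placeholders, not terms from a published vocabulary.
COLUMN_IDS_A = {
    "year": "http://example.org/def/year",
    "cpi": "http://example.org/def/cpi-all-items",
}
COLUMN_IDS_B = {
    "year": "http://example.org/def/year",
    "cpi": "http://example.org/def/cpi-excluding-food-and-energy",
}


def read_rows(text):
    """Parse CSV text into a list of dictionaries keyed by heading."""
    return list(csv.DictReader(io.StringIO(text)))


def shared_headings(rows_a, rows_b):
    """Headings that appear in both files; all a plain CSV merge can see."""
    return sorted(set(rows_a[0]) & set(rows_b[0]))


def conflicting_headings(ids_a, ids_b):
    """Shared headings whose identifiers differ between the two files."""
    return sorted(h for h in ids_a.keys() & ids_b.keys() if ids_a[h] != ids_b[h])


rows_a = read_rows(EXTRACT_A)
rows_b = read_rows(EXTRACT_B)

# By heading alone, both columns look compatible.
print(shared_headings(rows_a, rows_b))

# With identifiers attached, the mismatch on "cpi" is visible.
print(conflicting_headings(COLUMN_IDS_A, COLUMN_IDS_B))
```

A heading-to-identifier mapping like this is only a stand-in; the point is that the meaning of a column has to live somewhere that software can check, rather than in the analyst's memory.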

Both situations point out that data consumers are self-interested and have different levels of sophistication. Linked [Open] Data is a tremendously powerful tool for managing and exchanging data. However, it requires a high level of sophistication to consume and it is not an end-user-facing technology. The problems that it solves have traditionally been hidden from consumers, both by the lack of available data and by application design. Examples:

- In a pen-and-paper world, a longhand reference to the Consumer Price Index (CPI) as reported in 326-0021 or 326-0020 would have a narrative attached identifying which subfield was used. In a data table without a data dictionary, which of the different CPIs is being reported under the CPI table column, and what will the application tell the end user about it?

- We deploy content stores with tens of thousands of digital assets on the web, searchable by keywords because that is easy for the end user to understand and use. We also expect that the user will search that particular content store because it holds the collection that they are interested in. What happens when there are tens of thousands of content stores to choose from? Hundreds of thousands? Our ability to generate content is overwhelming our ability to search it, and soon the shortcuts that users 'understand' will cease to be effective.

We are dangerously close to a second digital dark age where access will become impossible because we won't be able to find anything. The data mob will take us to the guillotine when told to eat semantic cake!

I do believe that Linked Open Data is our best solution yet, but we have to focus on end-user applications that solve users' problems with our tools. I go back to my previous blog post about cool tools and the deafening sound of crickets on applications; perhaps "cool tools" was the wrong title. We need to start using Linked Open Data ourselves, eat our own semantic dog food and push the technology to end users via applications that solve basic but useful problems. Developers similarly need tools that match those we have constructed for the previous generations of structured data.

Mismatch With Organizational Structure And Culture

Most organizations work on a model where software applications are acquired, installed and maintained by an IT department that responds to business unit needs. Linked Open Data sits uncomfortably between web publishing and databases: it is not a ready-made shrink-wrap application, and its publication functions are close enough to the web to trigger a "just use the web site we have" response. The same issue arises with the "SPARQL server" that needs to be firewalled from the public because the IT security policy says that databases must be.

The same thing occurs with the marketing department, which now sees a new channel to the customer but one devoid of the properties that it expects: branding, user experience, human interaction, look and feel. Comical conversations have ensued in the past, where a business analyst deemed an API unprofitable since no ad conversions occurred, or where demands were made that the company logo be shown on every API call.

A move to Linked Open Data requires a period of adjustment to align the different operating procedures and policies with the new technology. It can be a hard time and/or a funny one; we forget there was a time when people used to print their own email to read it! Even Amazon, the 800-pound gorilla of the SOA world, famously required a direct company-wide order from the CEO to start using APIs internally.

Finally, there is a deeply rooted belief that more value can always be created by selling closed data sets than by simply opening up the data. A recent example is the removal from the public domain of Saskatchewan's Dominion Land Survey shapefiles in favour of a commercial product now being sold by Information Services Corporation. There is a trust issue in linking to and using someone else's data: the data must be available in the long term for people to be willing to invest the time required. This isn't to say that there isn't room for Linked Data as well as Linked Open Data. Many commercial services should be built around the technology, but consistency is a large part of creating a knowledge graph.

Why Don't We Just Put It in Wikidata (WDWJPIIW)?

When faced with limited resources, creating entries in Wikipedia / DBpedia / Wikidata becomes attractive: we create the data but someone else gets to do all of the heavy lifting. These are ideal services, especially for one-off pieces of information which, when added together, make for large and diverse knowledge bases. The issues that do pop up are of agency, mission and authority. The wikis function in what may be best described as a chaotic democracy: reversions, corrections and deletions all occur on an ongoing basis through a process of community curation. That system is not perfect and at times mistakes, vandalism and unreasonable behaviours do occur. One tongue-in-cheek DBpedia entry for the fictitious Official Timocracy of Sapinetia even managed to get itself inserted in a now-deprecated version of the Muninn Military ontology.

Wikipedia processes are not necessarily aligned with your own, though there is a lot of goodwill to be had. If your own systems depend on that goodwill to function on Monday morning at 3am, your mileage may vary. As with all other crowdsourced environments, you must accept that people with much less knowledge of your area will feel free to edit your carefully curated triples, sometimes inappropriately. If you are in a situation where you can tolerate this and keep watch over your triples, then perhaps these services may be a good home for your data.

The remaining issue is one of mission and authority. When people retrieve data from any of the crowdsourced sites, they have an understanding that the data may not be authoritative. The triples are likely to be valid, but you are getting a data set that may have been edited inappropriately. At times, the truth from the proverbial horse's mouth is needed, with an authoritative answer from the competent institution. If this is you, then perhaps you want to serve your own data in house, since in this case the primary value of the data is its authenticity and the fact that you are delivering it preserves the chain of trust.

Conclusion

The gains of Linked Open Data in Canada have been meagre in the past year. There have been a number of issues holding adoption back, none of which are insurmountable or inherently show-stoppers. The Linked Data Projects in Canada webpage will be updated on an ongoing basis. Please feel free to email me about updates or, better yet, new Linked Open Data sets in Canada.

(With thanks to Joel Cummings for his comments)

1. Arnold Fee, 2017-2-24

2. Tim Jaques, 2017-3-30

3. With apologies to Henry Louis Mencken.

References

- [1] MJ Suhonos, “Linked Data in Canada: Behind the Curve”, in Canadian Linked Data Summit, Montreal, Canada, 2016.
