- English

- Francais

Linked Open Data for Ultra Realistic Simulations

One of the uncomfortable questions that is often repeated with projects generating linked open data is "So, you've created a database. Now what?". You've created the datasets, published them in linked open data and created useful API's, SPARQL endpoints and maybe even a nice html layout for the data. But how do you actually use the data and does it actually ever get used?

What we really need are smart user agents that can interpret a lot of the detail and pick out what is relevant. This has some uncomfortable connotations that we are abdicating some of our decisions to a piece of software, but to a certain extent we already do when using modern web browsers (that auto-negotiate languages) and recommendation systems when shopping online. It seems reasonable that we should extend some of the same permissions to software agents that are sifting through data. Do you really care if it makes the decision not to pick up the Esperanto language description triples on the dataset? Perhaps more importantly, as we move from data models that were first tabular, relational and then graph oriented, you have the embarrassment of choice in the data that you get and as with web search engine, a little bit of automation here won't hurt. At the core of the semantic web is the idea that the data is written for a machine that will answer a human need. And if you accept that notion, you probably don't want humans querying the data themselves or writing data so that it will be easy for humans to use but hard for a machine.

An idea that is being worked on is the use of simulations as data visualization tools. We've explored the use of various forms of visualizations, reports and graphing tool kits but at the end of the day these are only representing a few dimensions of a data set at a time. Worse, you have to know exactly what you are looking for in order to be able to query it for the visualization tool to display it properly. Of course, this is assuming that the data is fundamentally tabular: a table, a spreadsheet, elements easily classify and summarized statistically.

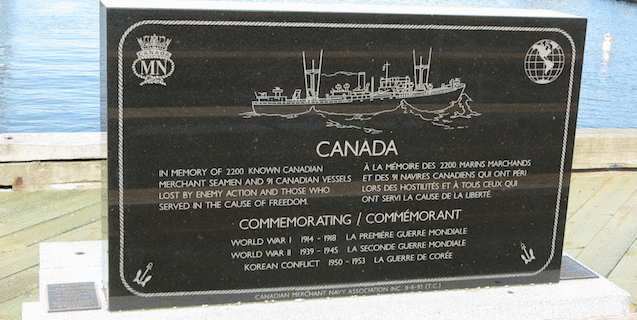

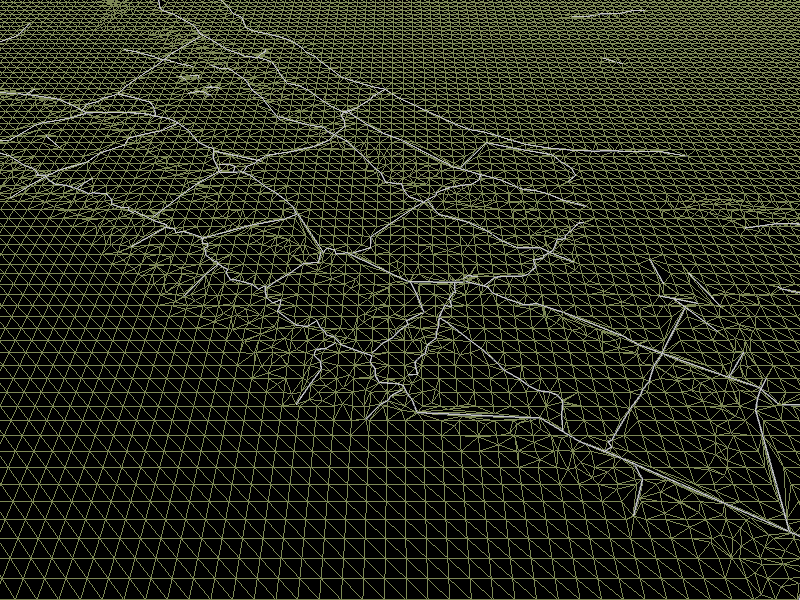

Muninn has been generating some data for a time now about various aspects of the Great War, including a lot of detailed GIS geometry data derived from trench maps of the era. The trenches that snaked across Western Europe and many of the craters of explosions are still visible today in many places. To the left is an early wireframe model of the German trenches that were part of the Hindenburgh Line at 50.1633888, 3.0499266 near Moeuvres in France. With some patience and a lot of hard work on digitization techniques, an impressive collection of historical GIS features is being generated.

As the volume of data grows in both its volume and its detail, trying to find anything in the database becomes a complex querying exercise. Qualifiers, Archival Description Formats and other clever labeling techniques won't help - there is too much data and how to represent it in a way that makes sense is annoying complicated.

In a way that is similar to what the Ordnance Survey has done with their current data and minecraft, we've been exploring the use of game engines as an information retrieval engine for linked open data. The innovation is in the simulation's ability to use multiple different sources of data concurrently to deal with unknowns in the data and estimate missing data through the use of both ontology and alternative data.

There have been games that have previously used real data in their conception, but the idea of having a game dynamically load public linked open data from a database and create the terrain in an online fashion is very novel. Unity3d was used to build the visualization while fetching triples on the fly from a SPARQL servers. In the end, we created a separate server with all of the triples that could be consumed because while Unity3D provides a means of retrieving web data, it enforces a sandbox model that favors its own style of domain permission and does not support the CORS (Cross Origin Request Security) method currently favored by LOD people. (Incidentally, is your RDF data server providing CORS?)

For all of the concerns about SPARQL data throughput, the major problem so far has to do with getting the rendering engine to keep up with that data. The screen shot on the right is an early prototype that renders the trenches of Vimy Ridge in early 1914, 3 years before the Battle of Vimy Ridge. The primary focus right now is on taking advantage of the underlying ontologies that contain the geometry (we used a hybrid of Linked Geo Data, Ordered List Ontology and W3 geo:Point's) to link to both map icons and 3D assets / terrain manipulation code at the same time.

So far, the basic terrain is working and the promise is that through linked open data we can have a flexible data manipulation infrastructure that can relate a thing to various degrees of visualization or study ranging from a 2D historical map, like Open Historical Map, all the way to immersive 3D environments or 3D printed versions of the terrain.

Lastly one of the items that is being played with is renewing the idea of book stack browsing or serendipitous discovery of material and resources. The vast majority of older items in catalogs are never viewed or retrieved. There are various reasons for this, including lack of user interest, poor information retrieval mechanism and ...possibly there isn't actually anything interesting in the document. But there might be one of two documents that are extremely important in the collection that people don't know about because we have never looked at them before. Simulations and game designers have the reverse problem in that getting content for their games that looks original and isn't repetitive is time consuming. We're trying to solve two problems at once by using the content of linked open data archival libraries to provide content to the simulation. This is also an oppourtunity to ensure that the entirety of the archives get regularly looked at and ensure that valuable documents are brought to attention.

A sample implementation and a review of the advantages, pitfalls and ways of mixing visualisations and retrieval are still being determined and the topic of an upcoming publication. Stay turned for a downloadable version shortly!

- Log in to post comments